Perception SDK

What “Perception SDK” means in industry (short)

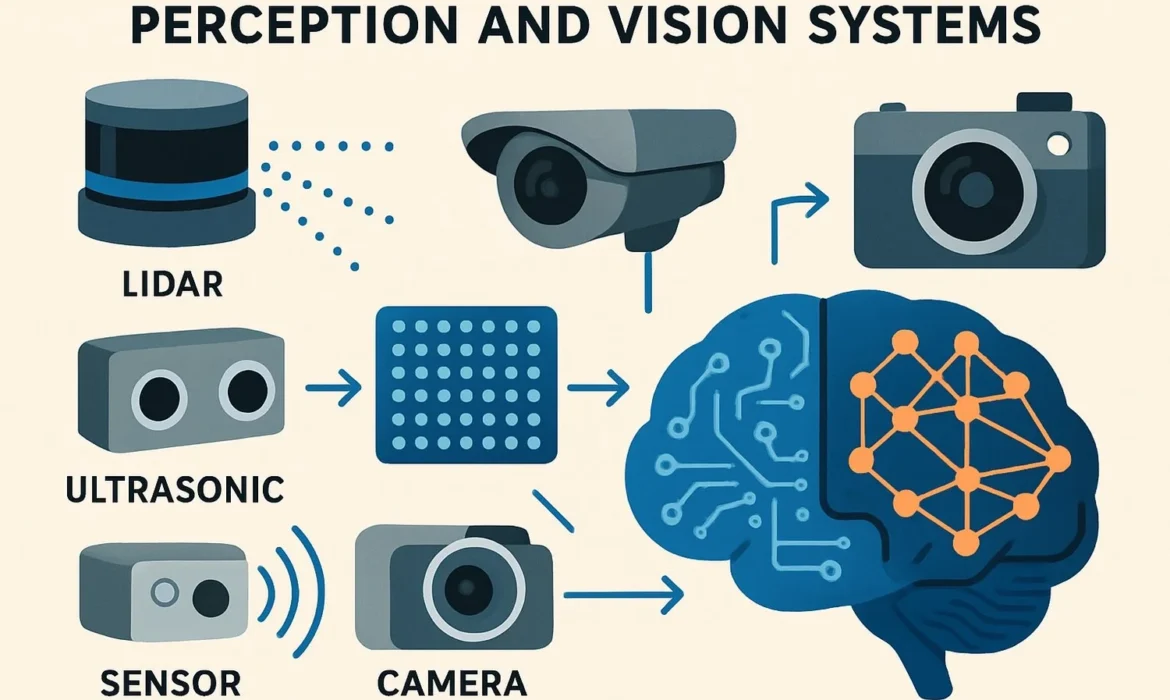

A perception SDK is a combination of middleware and libraries that lets engineers convert raw sensor data (cameras, LiDAR, radar, IMU) into usable scene representations (2D/3D detection, tracking, semantic maps, occupancy grids, BEV, etc.). In industry, these SDKs bundle sensor abstraction, calibration tooling, optimized inference runtimes, pre-trained models, and integrations with higher-level stacks (localization, planning). Examples in practice include NVIDIA DRIVE/DriveWorks, NVIDIA Isaac for robots, Intel OpenVINO for optimized inference, and Mobileye’s EyeQ/SDK for camera-first ADAS/AV implementations. (Sources: NVIDIA Developer, OpenVINO Documentation, Mobileye)
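
To make the calibration and sensor-abstraction role concrete, here is a minimal sketch of one step such an SDK typically hides: projecting a LiDAR point into a camera image using extrinsic and intrinsic calibration. The function name `lidar_to_pixel` and all numeric values are hypothetical, chosen only for illustration; it assumes an ideal pinhole camera with no lens distortion and is not taken from any vendor API.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def lidar_to_pixel(
    point_lidar: Vec3,
    rotation: Mat3,        # assumed extrinsic rotation, LiDAR frame -> camera frame
    translation: Vec3,     # assumed extrinsic translation in meters
    fx: float, fy: float,  # focal lengths in pixels (intrinsics)
    cx: float, cy: float,  # principal point in pixels (intrinsics)
) -> Optional[Tuple[float, float]]:
    """Project one LiDAR point into the image; return None if it is behind the camera."""
    # Rigid transform into the camera frame: p_cam = R * p_lidar + t
    x, y, z = (
        sum(rotation[i][j] * point_lidar[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if z <= 0.0:
        return None
    # Ideal pinhole projection (lens distortion is deliberately ignored here)
    return (fx * x / z + cx, fy * y / z + cy)


# Illustrative values only: identity extrinsics and a 1280x720-style camera.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pixel = lidar_to_pixel((1.0, 0.5, 10.0), identity, (0.0, 0.0, 0.0),
                       fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
print(pixel)  # (740.0, 410.0)
```

A production SDK would add distortion models, time synchronization, and batched GPU execution on top of this arithmetic.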

How robotics vs. autonomous-vehicle (AV) industries use perception SDKs

- Robotics (AMRs, manipulators): emphasis on real-time multi-camera odometry, 3D reconstruction, SLAM, and object segmentation for manipulation and navigation. Stacks often build on ROS/Isaac ROS plus optimized inference (OpenVINO / TensorRT). NVIDIA’s Isaac ROS modules (e.g., Isaac Perceptor) are commonly used to accelerate robot perception on Jetson platforms. (Sources: nvidia-isaac-ros.github.io, NVIDIA Developer)

- Autonomous cars: heavier emphasis on multi-sensor fusion (camera + LiDAR + radar), BEV (bird’s-eye-view) perception, long-range tracking, safety and real-time constraints, and automotive-grade middleware (functional safety, ISO 26262 considerations). Industry stacks tend to use vendor SDKs (NVIDIA DRIVE, Mobileye EyeQ + EyeQ Kit) or open-source stacks like Autoware and Autoware.Auto. (Sources: NVIDIA, Mobileye, GitLab)
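
As a rough illustration of the multi-sensor fusion idea, the sketch below combines range estimates from several sensors with inverse-variance weighting and simply skips any sensor that has dropped out. The function name `fuse_ranges`, the sensor names, and the variances are assumptions made for this example; real AV stacks use far richer models (tracking filters, learned fusion), so treat this as the simplest possible instance of the idea.

```python
from typing import Dict, Optional, Tuple

Measurement = Tuple[float, float]  # (range in meters, variance in m^2)


def fuse_ranges(
    measurements: Dict[str, Optional[Measurement]]
) -> Optional[Measurement]:
    """Inverse-variance weighted fusion; a value of None marks a dropped-out sensor."""
    valid = [m for m in measurements.values() if m is not None and m[1] > 0.0]
    if not valid:
        return None  # no usable sensor: the caller must handle the fallback
    weight_sum = sum(1.0 / var for _, var in valid)
    fused_value = sum(value / var for value, var in valid) / weight_sum
    # The fused variance is never larger than the best single-sensor variance.
    return fused_value, 1.0 / weight_sum


# Illustrative numbers only: the radar reading is missing in this frame.
fused = fuse_ranges({
    "camera": (52.0, 4.0),
    "lidar": (50.0, 1.0),
    "radar": None,
})
print(fused)
```

In this example the fused estimate lands close to the LiDAR reading because LiDAR carries the smaller assumed variance, and the missing radar measurement degrades the result gracefully instead of breaking it.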

Major commercial & open-source SDKs / platforms to study (quick list)

- NVIDIA DRIVE / DriveWorks / DRIVE SDK: full AV stack with sensor abstraction, accelerated perception modules, and camera pipelines. (Source: NVIDIA Developer)

- NVIDIA Isaac / Isaac ROS / Isaac Perceptor: robot-focused libraries with modules for multi-camera perception, odometry, and 3D reconstruction. (Sources: NVIDIA Developer, nvidia-isaac-ros.github.io)

- Mobileye EyeQ & EyeQ Kit: vision-first SoC plus SDK, widely integrated into OEM ADAS and some AV programs. (Source: Mobileye)

- Intel OpenVINO: inference optimization across CPUs, GPUs, and NPUs, used in robotics and automotive prototyping. (Sources: OpenVINO Documentation, amrdocs.intel.com)

- Autoware / Autoware.Auto: open-source AV perception and planning stack used by research teams and some pilot deployments. (Sources: GitLab, Autoware)

- Other vendors / ecosystems to check: Qualcomm (Snapdragon Ride), Tier-1 and semiconductor suppliers (Bosch, NXP), and proprietary stacks at Waymo, Tesla, and Cruise (research papers and limited public docs only).

Recent AI advancements shaping perception SDKs (and what they change)

- BEV (bird’s-eye-view) methods and multi-camera fusion: these convert multi-camera imagery into a unified top-down representation for detection, segmentation, and prediction. BEV is now central to many AV perception pipelines. (Sources: GitHub, arXiv)

- Transformer and spatiotemporal architectures: transformers that attend over both space and time (e.g., RetentiveBEV and other BEV transformers) improve long-range context and motion modeling. (Source: SAGE Journals)

- Self-supervised / semi-supervised learning and sim-to-real: methods that reduce expensive labeling, including retrieval-augmented and domain-adaptation approaches that bridge simulation and reality (important for scaling up industry datasets). (Source: arXiv)

- Sensor-fusion improvements (radar + LiDAR + camera) and robustness to sensor failure: benchmarks now explicitly test input corruption and sensor dropout. (Source: arXiv)

- Edge/embedded acceleration: optimized runtimes (TensorRT, OpenVINO) and model compression are integrated into SDKs so that perception can run within automotive and robotic hardware constraints. (Sources: OpenVINO Documentation, amrdocs.intel.com)
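
To give a feel for what a BEV representation is, the sketch below bins 3D points into a top-down grid and counts points per cell. This is a simple geometric stand-in, not the learned camera-to-BEV lifting used by the transformer-based methods listed above; the function name `points_to_bev`, the grid extents, and the cell size are all hypothetical.

```python
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]  # (x forward, y left, z up) in meters


def points_to_bev(
    points: Iterable[Point],
    x_range: Tuple[float, float] = (0.0, 4.0),
    y_range: Tuple[float, float] = (-2.0, 2.0),
    cell_size: float = 1.0,
) -> List[List[int]]:
    """Count points per top-down cell; rows index y, columns index x."""
    cols = int((x_range[1] - x_range[0]) / cell_size)
    rows = int((y_range[1] - y_range[0]) / cell_size)
    grid = [[0] * cols for _ in range(rows)]
    for x, y, _z in points:  # height is discarded in this simple projection
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue  # point lies outside the grid extents
        # Clamp guards against floating-point rounding at the upper edge.
        col = min(int((x - x_range[0]) / cell_size), cols - 1)
        row = min(int((y - y_range[0]) / cell_size), rows - 1)
        grid[row][col] += 1
    return grid


# Illustrative points: two near the origin corner, one far corner, one out of range.
bev = points_to_bev([
    (0.5, -1.5, 0.2),
    (0.7, -1.2, 0.0),
    (3.2, 1.9, 1.0),
    (10.0, 0.0, 0.0),
])
for row in bev:
    print(row)
```

Learned BEV models replace the point counts with feature vectors per cell, but the grid layout they predict into is the same kind of structure.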

Key datasets & benchmarks you should include in the research

- Waymo Open Dataset: large LiDAR + camera dataset widely used for perception benchmarks and challenges. (Sources: arXiv, Waymo)

- nuScenes / nuImages: nuScenes is a multimodal dataset (camera, LiDAR, radar) useful for sensor-fusion studies; nuImages is its image-only companion. (Source: nuscenes.org)

(Also consider KITTI, Lyft Level 5, Argoverse depending on historical comparisons and licensing.)

Typical industry integration points & engineering concerns

- Sensor abstraction and calibration (SDKs provide sensor-abstraction layers, time synchronization, and calibration tooling). (Source: NVIDIA Developer)

- Real-time constraints and inference optimization (quantization, TensorRT/OpenVINO, specialized SoCs). (Sources: OpenVINO Documentation, amrdocs.intel.com)

- Safety, explainability, and deterministic behavior: integration with safety frameworks and monitoring (e.g., redundancy and failover when sensors misbehave). Vendor whitepapers and automotive blog posts discuss these tradeoffs. (Sources: NVIDIA, Mobileye)

- Data lifecycle: from collection (vehicle fleets / robots) → annotation → model training → continuous validation on closed tracks and limited public roads. Waymo/nuScenes docs and DRIVE blogs are useful references.
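
The quantization step mentioned under inference optimization can be sketched in a few lines. The example below shows symmetric per-tensor int8 quantization in plain Python; the helper names `quantize_int8` and `dequantize` are hypothetical and the weights are made up. Runtimes such as TensorRT and OpenVINO use calibration data and more elaborate schemes, so this shows only the underlying arithmetic, not either tool's API.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0.0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [q * scale for q in quantized]


# Illustrative weights only.
weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [50, -127, 0, 100]
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(max_error <= scale / 2 + 1e-12)  # True
```

The rounding error per weight is bounded by half the scale, which is why a tensor with a few large outliers quantizes poorly and why production toolchains often use per-channel scales or calibration to pick tighter ranges.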