The Eyes and Awareness of Autonomy

Vision & Sensing Systems

At Robotonomous, effective autonomy begins with superior perception. Our Vision & Sensing Systems are the eyes and awareness of next-generation robots and autonomous vehicles, providing the rich, reliable data necessary for intelligent action. We don’t just integrate sensors; we fuse their data into a single, high-definition understanding of the real world. This forms the foundation of our Learning, Training, and Autonomy (LTA) platforms.

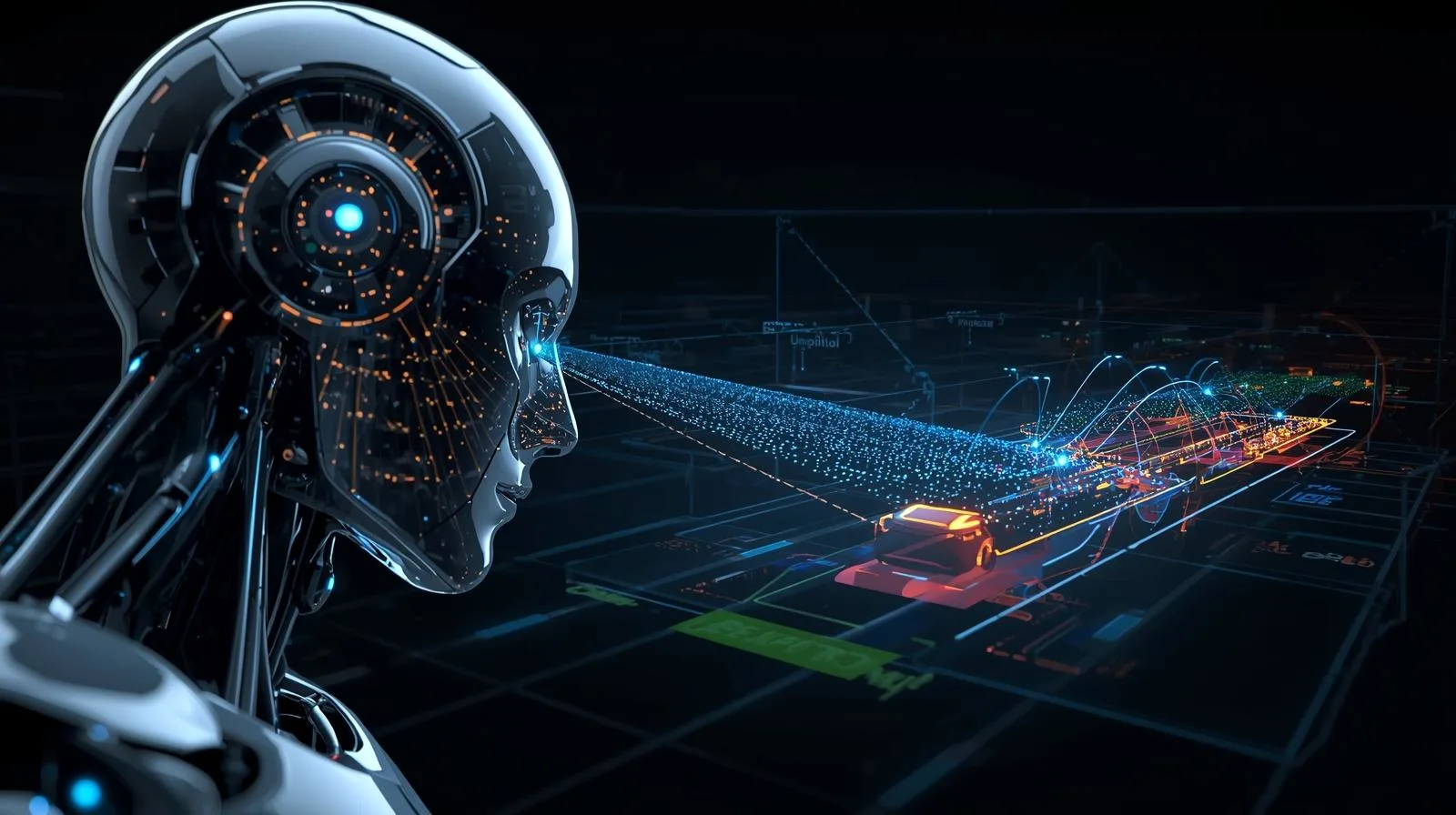

From Data Points to Unified World Models

High-Fidelity Sensor Fusion

The complexity of dynamic environments demands more than a single sensor reading. Our proprietary Sensor Fusion technology seamlessly integrates data from diverse sources—including cameras, LiDAR, radar, and inertial measurement units (IMUs)—to create a robust, unified world model.

- Real-Time Data Alignment: We synchronize and align asynchronous sensor inputs, compensating for differing sample rates and timing offsets, to minimize latency and ensure decisions are based on the most current information available.

- Redundancy and Reliability: By cross-validating information across multiple sensor types, we build systems that are resilient to noise, adverse weather, and individual sensor failures, ensuring precision and trust in every operation.

- Environmental Context: Our algorithms go beyond simple object detection to establish a comprehensive context, identifying objects, their speeds and trajectories, and their relationships to the autonomous agent.
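As a rough illustration of the alignment and cross-validation ideas above, the Python sketch below interpolates a high-rate stream (for example an IMU) to a camera frame's timestamp, then combines redundant range estimates with inverse-variance weighting after discarding readings that disagree sharply with the others. This is a generic textbook technique, not Robotonomous's proprietary fusion method; the names `Sample`, `align_to`, and `fuse`, the linear interpolation, and the 3-sigma gate are all hypothetical choices for the example. Production systems typically rely on full state estimators such as Kalman filters.

```python
from bisect import bisect_left
from dataclasses import dataclass
from statistics import median_low

# Hypothetical illustration only: a generic alignment-and-fusion sketch,
# not Robotonomous's proprietary Sensor Fusion implementation.


@dataclass
class Sample:
    t: float      # timestamp in seconds
    value: float  # scalar reading, e.g. a range in metres


def align_to(samples: list[Sample], t_query: float) -> float:
    """Linearly interpolate a time-sorted stream at t_query.

    Queries outside the stream's time span are clamped to the first or
    last sample rather than extrapolated.
    """
    times = [s.t for s in samples]
    i = bisect_left(times, t_query)  # first index with times[i] >= t_query
    if i == 0:
        return samples[0].value
    if i == len(samples):
        return samples[-1].value
    a, b = samples[i - 1], samples[i]  # a.t < t_query <= b.t
    w = (t_query - a.t) / (b.t - a.t)
    return a.value + w * (b.value - a.value)


def fuse(estimates: list[tuple[float, float]], gate: float = 3.0) -> float:
    """Inverse-variance weighted average of (value, variance) pairs.

    Estimates further than `gate` of their own standard deviations from the
    low median are treated as faulty and dropped (a simple cross-check).
    Variances are assumed to be strictly positive.
    """
    # median_low returns an actual estimate, so at least one always survives
    ref = median_low(v for v, _ in estimates)
    kept = [(v, var) for v, var in estimates if abs(v - ref) <= gate * var ** 0.5]
    weights = [1.0 / var for _, var in kept]
    return sum(w * v for w, (v, _) in zip(weights, kept)) / sum(weights)


if __name__ == "__main__":
    imu = [Sample(0.00, 0.0), Sample(0.01, 1.0), Sample(0.02, 2.0)]
    print(align_to(imu, 0.015))  # about 1.5: IMU value at the camera timestamp

    # (range_m, variance) from camera, LiDAR, radar, and a faulty sensor
    ranges = [(10.2, 0.25), (10.0, 0.01), (10.1, 0.04), (25.0, 0.04)]
    print(round(fuse(ranges), 2))  # 10.03: the 25.0 m reading is gated out
```

Weighting by inverse variance lets the most precise sensor (here the hypothetical LiDAR reading) dominate, while the median-based gate is one simple way to keep a single failed sensor from corrupting the result.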

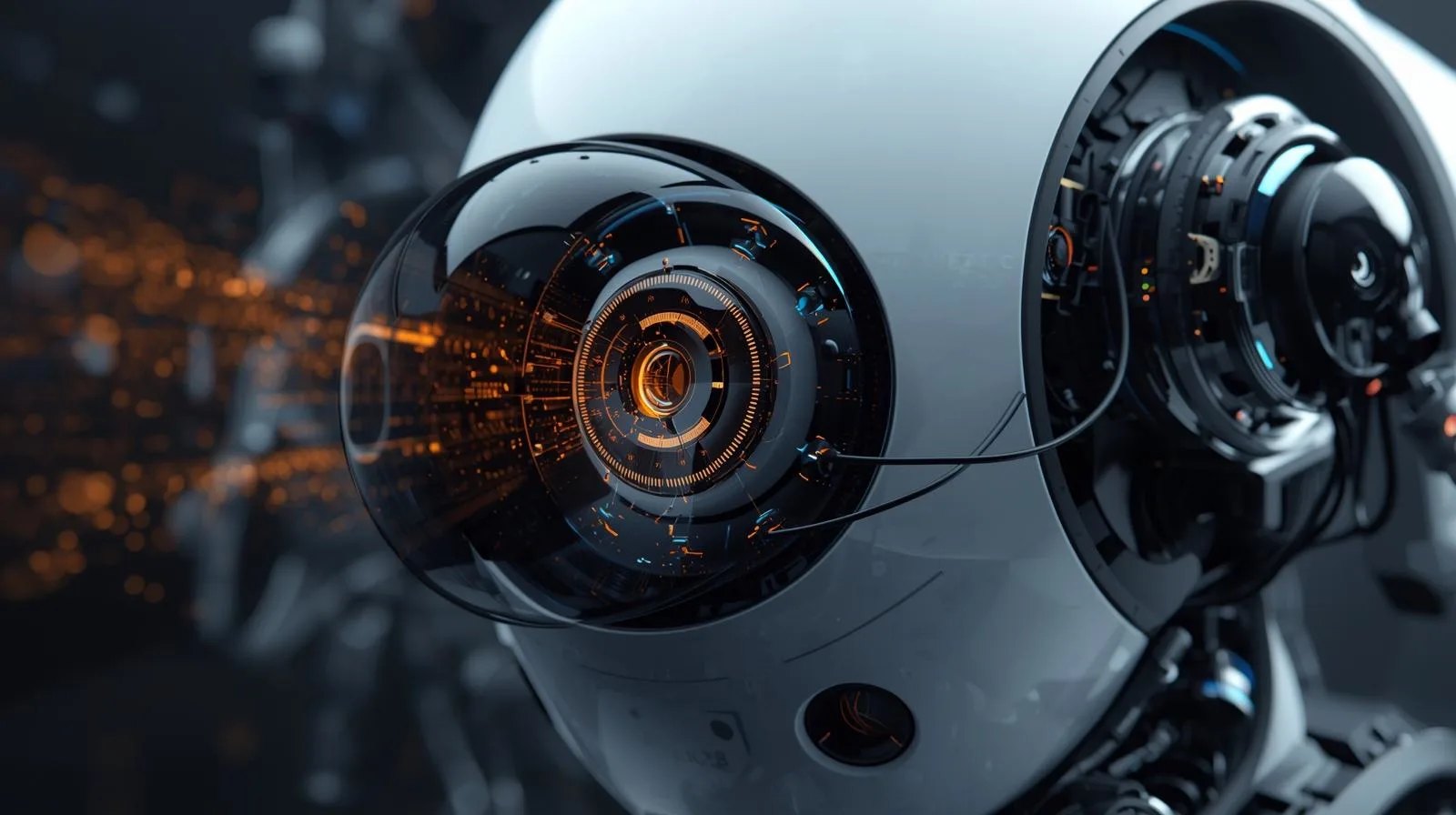

Perceiving the World with AI-Driven Clarity

Advanced Computer Vision Stacks

We build cutting-edge AI-based computer vision stacks that enable machines to interpret visual data with the same nuance and speed as a human, but with tireless consistency.

- Deep Learning Perception: Utilizing state-of-the-art neural networks, our systems achieve superior performance in object recognition, semantic segmentation (understanding what each pixel represents), and instance tracking in highly cluttered and dynamic scenes.

- 3D Reconstruction & Depth Perception: Our solutions precisely estimate depth and reconstruct the environment in three dimensions, capabilities that are critical for accurate path planning and obstacle avoidance, especially in unstructured environments.

- Behavioral & Intent Prediction: The vision stack processes visual cues not just to see what is, but to anticipate what will be, allowing robots and autonomous vehicles to predict the intent of pedestrians, vehicles, and other agents for proactive and safe decision-making.
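To make the depth and prediction ideas above concrete, the sketch below uses two standard building blocks: recovering depth from a rectified stereo pair via Z = f·B/d and back-projecting a pixel into 3D with a pinhole camera model, then extrapolating a tracked agent's motion under a constant-velocity assumption. Constant-velocity extrapolation is a common baseline for trajectory prediction, not a model of intent, and neither piece describes Robotonomous's actual vision stack. The class `RectifiedStereo`, the function `predict_constant_velocity`, and all camera parameters are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical illustration only: textbook stereo geometry and a
# constant-velocity baseline, not Robotonomous's vision stack.


@dataclass
class RectifiedStereo:
    fx: float        # focal length, pixels (x)
    fy: float        # focal length, pixels (y)
    cx: float        # principal point x, pixels
    cy: float        # principal point y, pixels
    baseline: float  # distance between camera centres, metres

    def depth(self, disparity: float) -> float:
        """Depth Z = fx * B / d for a rectified stereo pair."""
        if disparity <= 0:
            raise ValueError("disparity must be positive")
        return self.fx * self.baseline / disparity

    def back_project(self, u: float, v: float, disparity: float) -> tuple[float, float, float]:
        """Pixel (u, v) in the left image -> (X, Y, Z) in the left-camera frame."""
        z = self.depth(disparity)
        return ((u - self.cx) * z / self.fx, (v - self.cy) * z / self.fy, z)


def predict_constant_velocity(
    track: list[tuple[float, float, float]], horizon: float, dt: float
) -> list[tuple[float, float, float]]:
    """Extrapolate (t, x, y) positions assuming the last observed velocity holds."""
    (t0, x0, y0), (t1, x1, y1) = track[-2], track[-1]
    vx, vy = (x1 - x0) / (t1 - t0), (y1 - y0) / (t1 - t0)
    steps = round(horizon / dt)
    return [(t1 + k * dt, x1 + vx * k * dt, y1 + vy * k * dt) for k in range(1, steps + 1)]


if __name__ == "__main__":
    cam = RectifiedStereo(fx=700.0, fy=700.0, cx=640.0, cy=360.0, baseline=0.12)
    print(cam.back_project(710.0, 360.0, 42.0))  # approximately (0.2, 0.0, 2.0)

    pedestrian = [(0.0, 0.0, 0.0), (0.5, 1.0, 0.5)]  # (t [s], x [m], y [m])
    print(predict_constant_velocity(pedestrian, horizon=1.0, dt=0.5))
    # [(1.0, 2.0, 1.0), (1.5, 3.0, 1.5)]
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters far more for distant objects than for nearby ones; learned predictors are usually judged by how much they improve on a simple baseline like the constant-velocity one shown here.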