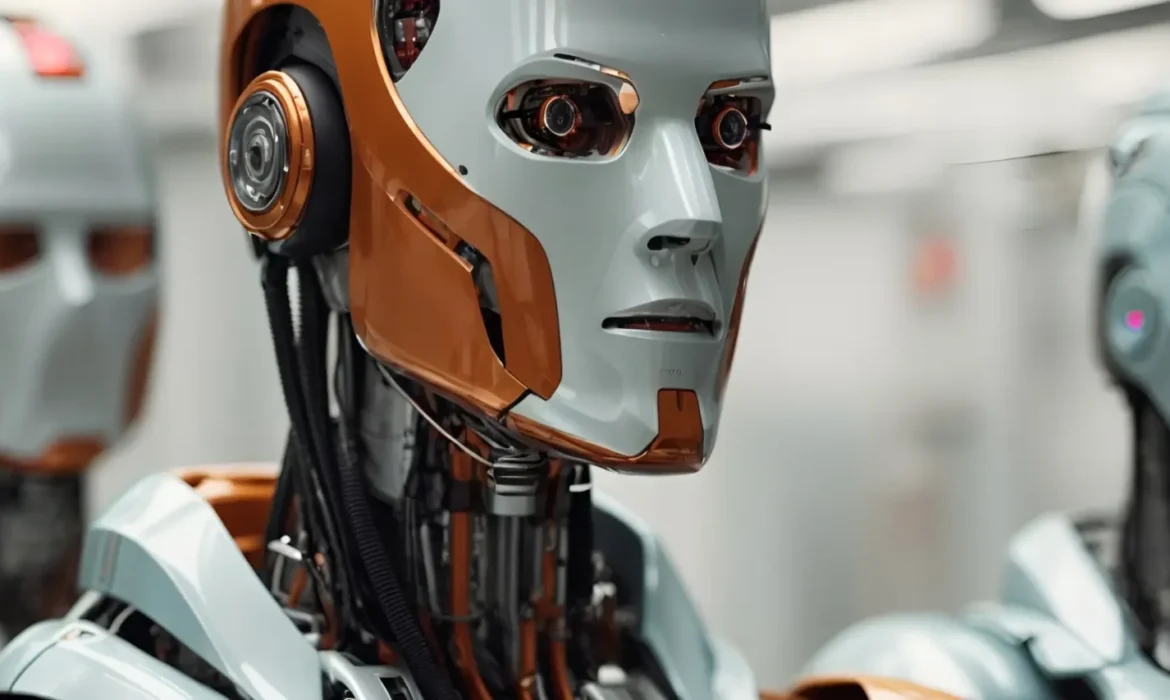

A machine is only as intelligent as its perception of the world. For truly autonomous operations—from a warehouse robot maneuvering tight aisles to a self-driving truck navigating dense US traffic—a comprehensive, accurate understanding of the environment is non-negotiable. This is achieved through the art and science of Sensor Fusion.

Why One Sensor Isn’t Enough

Individual sensors have inherent limitations. Cameras struggle in low light and glare; LiDAR returns degrade in rain and fog; and radar's coarse angular resolution makes fine-grained object classification difficult. Relying on a single input creates a single point of failure, especially across diverse operational design domains (ODDs) such as the rainy climate of the UK or the dusty conditions of a Chinese construction site.

The Robotonomous Fusion Advantage

Our Sensor Fusion & Autonomy Modules solve this challenge by intelligently combining the data streams from multiple sensor types. Fusion algorithms weigh the reliability of each sensor's input in real time, so that a sensor degraded by current conditions counts for less, and merge the results into a single, robust 3D model of the world. The outcome is superior Robotics Perception that stays reliable in conditions where any single sensor would fail.
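To make "weighing the reliability of each sensor's input" concrete, here is a minimal Python sketch using inverse-variance weighting, one standard way to combine independent noisy estimates of the same quantity. It is an illustration, not Robotonomous's algorithm: the sensor names, range readings, noise variances, and the tenfold fog penalty are all hypothetical values chosen for clarity.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Measurement:
    sensor: str
    distance_m: float  # this sensor's estimate of the range to one object
    variance: float    # assumed noise variance in m^2; larger means less trusted


def degrade(m: Measurement, factor: float) -> Measurement:
    """Inflate a sensor's variance when conditions hurt it (e.g. LiDAR in fog)."""
    return replace(m, variance=m.variance * factor)


def fuse(measurements: list[Measurement]) -> tuple[float, float]:
    """Inverse-variance weighted fusion of independent estimates.

    Each estimate is weighted by 1/variance, so noisier sensors count for less.
    Returns (fused_distance_m, fused_variance).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    if any(m.variance <= 0 for m in measurements):
        raise ValueError("variances must be positive")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    fused = sum(w * m.distance_m for w, m in zip(weights, measurements)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    # Hypothetical readings for one object in clear weather.
    clear = [
        Measurement("camera", 22.0, 1.0),
        Measurement("lidar", 20.0, 0.5),
        Measurement("radar", 21.0, 1.0),
    ]
    print(fuse(clear))  # (20.75, 0.25)

    # Fog: down-weight LiDAR by inflating its variance tenfold (hypothetical factor).
    foggy = [degrade(m, 10.0) if m.sensor == "lidar" else m for m in clear]
    print(fuse(foggy))  # fused estimate shifts toward camera and radar
```

In fog the fused range moves toward the camera and radar readings and the fused variance grows, which is the "weigh reliability in real time" behavior in miniature. Production stacks usually fuse full 3D state with a Kalman filter or one of its variants; for a single static scalar quantity, the filter's measurement update reduces to this same weighting.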

Real-Time Data Processing at the Edge

Crucially, this fusion must happen with minimal delay to enable real-time autonomy. We leverage our expertise in Embedded Systems & Firmware and use Edge AI Inference Modules to ensure that data is processed locally with sub-millisecond latency. There is no time to send critical perception data to the cloud and wait for a reply when a split-second decision, such as braking or avoiding a collision, is required. This localized, high-speed processing ensures that the machine's planning and control systems always act on the most accurate, current understanding of the environment.
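A quick back-of-the-envelope calculation shows why the round trip matters. The sketch below computes how far a vehicle moves before a perception result can trigger a response. The 30 m/s speed and the 100 ms cloud round trip are hypothetical figures for illustration, and 1 ms is used as a conservative upper bound for the sub-millisecond on-board budget.

```python
def distance_during_latency_m(speed_m_s: float, latency_s: float) -> float:
    """Distance (m) traveled before a perception result can trigger an action."""
    if speed_m_s < 0 or latency_s < 0:
        raise ValueError("speed and latency must be non-negative")
    return speed_m_s * latency_s


if __name__ == "__main__":
    speed_m_s = 30.0  # hypothetical highway speed, about 108 km/h (67 mph)
    # Illustrative latency budgets, not measured values.
    scenarios = {
        "local edge inference (1 ms upper bound)": 0.001,
        "cloud round trip (hypothetical 100 ms)": 0.100,
    }
    for label, latency_s in scenarios.items():
        d = distance_during_latency_m(speed_m_s, latency_s)
        print(f"{label}: {d:.2f} m traveled before a response")
```

At that speed the on-board path corresponds to about 3 cm of travel, while the hypothetical cloud round trip corresponds to about 3 m, before any braking has even begun.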

By providing this seamless, integrated perception layer, Robotonomous delivers the foundation upon which all reliable Full-Stack Autonomy is built.